Neural Network Tutorials - Herong's Tutorial Examples - v1.22, by Herong Yang

Impact of Learning Rate

This section provides a tutorial example to demonstrate the impact of the learning rate on a neural network model. If a neural network model starts to generate an oscillating training loss, the learning rate can be reduced to help the model converge to a better solution.

In the classical neural network model, the learning rate is important when the error function has a rough surface near its minimum. A learning rate that is too large makes each update step overshoot, so the model oscillates around a possible solution instead of settling into it.

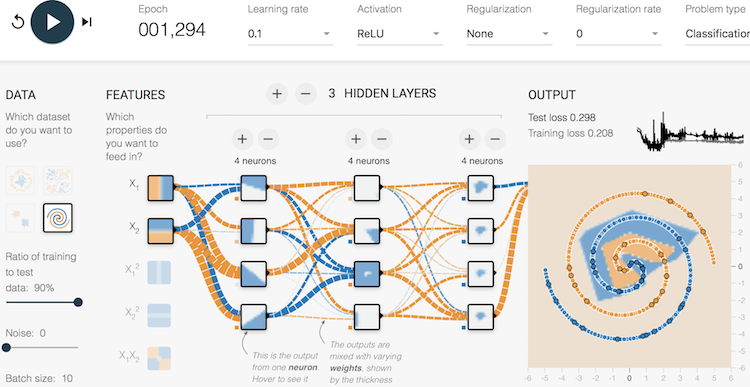
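To make the overshooting effect concrete, here is a minimal sketch that is not part of the playground itself. It assumes a one-parameter error function f(w) = w**2 and plain gradient descent; the function names `gradient_descent` and `sign_flips` are hypothetical helpers introduced only for this illustration.

```python
def gradient_descent(learning_rate, start=1.0, steps=6):
    """Minimize the assumed error function f(w) = w**2 and return every w visited."""
    w = start
    path = [w]
    for _ in range(steps):
        grad = 2.0 * w                  # derivative of w**2
        w = w - learning_rate * grad    # plain gradient descent update
        path.append(w)
    return path


def sign_flips(values):
    """Count how often consecutive values land on opposite sides of the minimum at 0."""
    return sum(1 for a, b in zip(values, values[1:]) if a * b < 0)


small = gradient_descent(0.1)   # each step shrinks w by a factor of 0.8
large = gradient_descent(0.9)   # each step multiplies w by -0.8

print("lr=0.1:", [round(w, 3) for w in small])
print("lr=0.9:", [round(w, 3) for w in large])
print("sign flips:", sign_flips(small), "vs", sign_flips(large))
```

With the small rate, w approaches the minimum from one side. With the large rate, every step jumps over the minimum to the other side, which is the oscillation described above. A real network has many parameters and a much rougher error surface, so treat this only as an intuition aid.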

If you look at the tests we did in the previous tutorial, you will see that the model with 3 layers and 4 neurons per layer failed to converge to a better solution. A learning rate of 0.1 was used, and it was probably too large, because the training loss history is an oscillating curve.

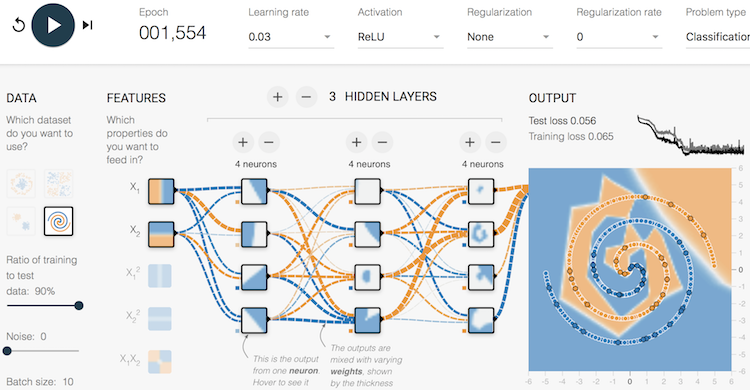

If you reduce the learning rate to 0.03 and play it again, the model will converge further and stop with a test loss of 0.056.

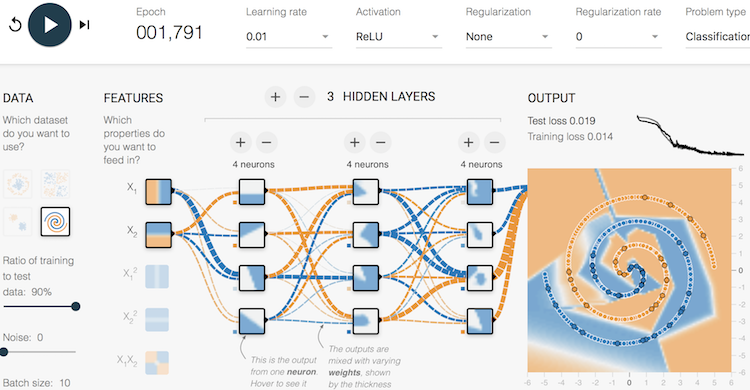

If you reduce the learning rate to 0.01 and play it again, the model will converge further and stop with a test loss of 0.019.
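The manual procedure used here (watch the training loss curve, and lower the learning rate when it oscillates) could be sketched in code as follows. This is only a rough heuristic under stated assumptions: `is_oscillating` and `next_learning_rate` are hypothetical helpers, the threshold of 3 direction changes is arbitrary, and the two loss histories are made-up numbers, not results from the playground. Only the candidate rates 0.1, 0.03 and 0.01 come from the tests above.

```python
def is_oscillating(losses, min_flips=3):
    """Return True if the loss curve changes direction at least min_flips times."""
    deltas = [b - a for a, b in zip(losses, losses[1:])]
    flips = sum(1 for d1, d2 in zip(deltas, deltas[1:]) if d1 * d2 < 0)
    return flips >= min_flips


def next_learning_rate(current, candidates=(0.1, 0.03, 0.01)):
    """Pick the next smaller candidate rate, or keep the current one if none is smaller."""
    smaller = [c for c in candidates if c < current]
    return max(smaller) if smaller else current


# Made-up loss histories, for illustration only.
noisy_history = [0.30, 0.22, 0.27, 0.20, 0.26, 0.19, 0.25]
smooth_history = [0.30, 0.22, 0.17, 0.13, 0.10, 0.08, 0.07]

lr = 0.1
if is_oscillating(noisy_history):
    lr = next_learning_rate(lr)     # step down from 0.1 to the next candidate

print("oscillating:", is_oscillating(noisy_history), is_oscillating(smooth_history))
print("next learning rate:", lr)
```

Counting direction changes is a deliberately simple choice. A loss curve from a real training run is noisy even when the learning rate is fine, so in practice you would still look at the curve yourself before changing the rate.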

Conclusion: if a neural network model starts to generate an oscillating training loss, the learning rate can be reduced to help the model converge to a better solution.
