Neural Network Tutorials - Herong's Tutorial Examples - v1.22, by Herong Yang

Impact of Extra Input Features

This section provides a tutorial example to show the impact of one extra input feature on a simple neural network model created on Deep Playground.

After learning how to use Deep Playground by building a simple neural network model in the previous tutorial, let's look at the impact of an extra input feature in this tutorial.

1. Continue with the previous tutorial of a 1-layer model with a linear activation function on the linear classification problem.

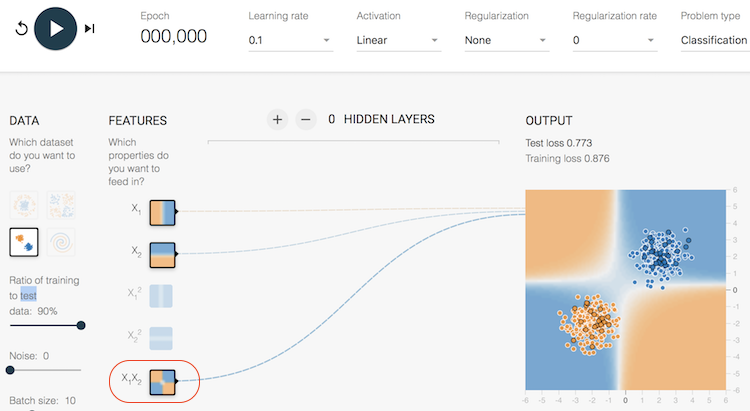

2. Click "X1X2" in the FEATURES list to add x1*x2 as the third input component in the model.

3. Click the "reset" icon repeatedly until the output area shows a poor initial weight matrix, as shown below. The initial weight matrix has a large positive value on the x1*x2 component, so the output is heavily impacted by the X1X2 input feature.

4. Click the "play one epoch" icon. The model improves quickly: the update gives the x1*x2 component a smaller weight, which reduces its impact on the output. Evidently, this extra input feature does not help in solving this linear problem.

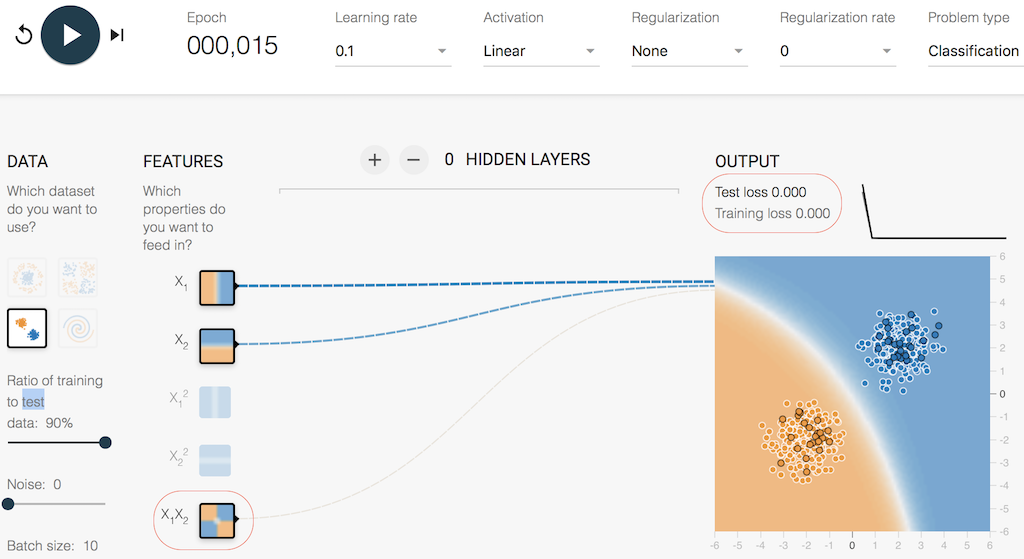

5. Click the "play one epoch" icon repeatedly until the model stabilizes (when "training loss" becomes 0.000). After this point, playing more training epochs will not improve the model, since there is almost no training loss left to drive changes to the weight matrix.
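The steps above can be imitated outside the playground. The sketch below is only an approximation under stated assumptions: it uses NumPy, a single linear output with no hidden layer, a squared-error loss, full-batch gradient descent, and two synthetic Gaussian clusters whose centers, spread and sample count are invented for illustration. Deep Playground itself applies its own output activation and mini-batches, so the loss values here will not match the 0.000 shown in the tool.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the run is repeatable

# Two Gaussian clusters, loosely imitating the linear classification data.
# Centers, spread and sample count are assumptions, not playground values.
n = 200
pos = rng.normal(loc=2.0, scale=0.7, size=(n, 2))    # label +1
neg = rng.normal(loc=-2.0, scale=0.7, size=(n, 2))   # label -1
X = np.vstack([pos, neg])
y = np.concatenate([np.ones(n), -np.ones(n)])

# Input features: x1, x2 and the extra product x1*x2.
features = np.column_stack([X[:, 0], X[:, 1], X[:, 0] * X[:, 1]])

# A deliberately poor start: a large positive weight on x1*x2 (step 3).
w = np.array([0.1, 0.1, 1.0])
b = 0.0
learning_rate = 0.03
w_start = w.copy()


def loss(weights, bias):
    """Half mean squared error of the linear output against the labels."""
    return 0.5 * np.mean((features @ weights + bias - y) ** 2)


loss_start = loss(w, b)
for step in range(3000):
    error = features @ w + b - y                      # output minus target
    w -= learning_rate * features.T @ error / len(y)  # gradient step on weights
    b -= learning_rate * error.mean()                 # gradient step on bias
loss_end = loss(w, b)

# Fraction of samples whose output sign matches the label.
accuracy = np.mean(np.sign(features @ w + b) == y)

print("start weights:", w_start, "loss:", loss_start)
print("final weights:", w, "bias:", b, "loss:", loss_end)
print("accuracy:", accuracy)
```

In this sketch the weight on x1*x2 should shrink toward zero while the weights on x1 and x2 stay positive, which mirrors what steps 4 and 5 show in the playground.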

The picture below shows a case in which 15 training epochs are enough to stabilize the model, which provides an arc-like solution to our linear problem. This is acceptable because our samples are concentrated in only 2 local areas. Any line, curved or straight, is a valid solution as long as it separates the 2 sample areas.
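Why the boundary can look like an arc: with linear activations, the output before any final squashing is a weighted sum of the input features, so the boundary is the set of points where w1*x1 + w2*x2 + w3*x1*x2 + b = 0. The small sketch below solves that equation for x2 and compares slopes at three points. The function names and the sample weights are hypothetical, chosen only to illustrate the shape.

```python
def boundary_x2(x1, w1, w2, w3, b=0.0):
    """Return x2 on the boundary w1*x1 + w2*x2 + w3*x1*x2 + b = 0."""
    denominator = w2 + w3 * x1
    if denominator == 0:
        raise ValueError("no unique x2 on the boundary at this x1")
    return -(w1 * x1 + b) / denominator


def slopes(w3):
    """Slopes of the boundary between x1 = -1, 0 and 1, with w1 = w2 = 1."""
    pts = [(x1, boundary_x2(x1, 1.0, 1.0, w3)) for x1 in (-1.0, 0.0, 1.0)]
    left = (pts[1][1] - pts[0][1]) / (pts[1][0] - pts[0][0])
    right = (pts[2][1] - pts[1][1]) / (pts[2][0] - pts[1][0])
    return left, right


straight = slopes(0.0)  # no x1*x2 weight: both slopes are equal
curved = slopes(0.5)    # nonzero x1*x2 weight: the slopes differ

print("w3 = 0.0 slopes:", straight)
print("w3 = 0.5 slopes:", curved)
```

When w3 is zero the two slopes agree and the boundary is a straight line. Any nonzero w3 bends it, and the smaller w3 becomes during training, the closer the arc gets to a straight line.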

If you reset the model with different initial weight matrices and train it again, the stabilized state will be close to the one shown in the picture above. It has two equal positive weights on the X1 and X2 input features. The extra input feature, X1X2, is almost wiped out by a very small weight, represented by the thin and grey link.

This suggests that the model with X1, X2 and X1X2 input features has only 1 solution.
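One way to see why repeated resets end up in the same place is sketched below, under the same simplifying assumptions as a plain linear model with squared-error loss (not the playground's exact setup). In that simplified setting the loss is convex, so when the feature matrix has full column rank there is exactly one minimizer, and gradient descent reaches it from any starting point. The data, the `train` helper and all parameter values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)

# Synthetic two-cluster data (assumed values, for illustration only).
n = 200
X = np.vstack([rng.normal(2.0, 0.7, (n, 2)), rng.normal(-2.0, 0.7, (n, 2))])
y = np.concatenate([np.ones(n), -np.ones(n)])

# Columns: x1, x2, x1*x2 and a constant 1 that plays the role of the bias.
A = np.column_stack([X[:, 0], X[:, 1], X[:, 0] * X[:, 1], np.ones(2 * n)])
rank = np.linalg.matrix_rank(A)  # full column rank means a unique minimizer

# Closed-form least-squares solution, independent of any initial weights.
closed_form, *_ = np.linalg.lstsq(A, y, rcond=None)


def train(w0, steps=3000, lr=0.03):
    """Full-batch gradient descent on half mean squared error from w0."""
    w = w0.copy()
    for _ in range(steps):
        w -= lr * A.T @ (A @ w - y) / len(y)
    return w


# Five "resets": each run starts from different random weights.
runs = np.array([train(rng.normal(0.0, 1.0, 4)) for _ in range(5)])
spread = runs.max(axis=0) - runs.min(axis=0)  # disagreement between runs

print("rank:", rank)
print("closed form [w1, w2, w3, b]:", closed_form)
print("largest spread across runs:", spread.max())
```

The spread across runs should be tiny, and every run should land on the closed-form solution, with a weight on x1*x2 close to zero.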

You can continue to play with different extra input features or add all input features to see how the model behaves.
